GPT-2¶

- Inspired by Andrej Karpathy: "Let's reproduce GPT-2 (124M)."

- Primary links

- 1st OpenAI GPT-2 Blogpost: Better Language Models and their Implications

- 1st OpenAI GPT-2 Paper: Language Models are Unsupervised Multitask Learners

- 1st OpenAI GPT-2 Code: Github

- OpenAI GPT-3 Paper: Language Models are Few-Shot Learners

- Huggingface GPT-2 Code: Github

- Relevant Github repositories

Table of Contents¶

- 0. Introduction

- 1. GPT-2

nn.Module- 1.1. Loading the

huggingface/GPT-2Parameters - 1.2. Forward Pass: Get Logits

- 1.3.

sampling init,prefix tokens, Tokenization - 1.4. Sampling Loop

- 1.5. Sample, Auto-detect the Device

- 1.6. Model Training:

Data Batches (B,T)-->Logits (B,T,C) - 1.7. Cross Entropy Loss

- 1.8. Optimization Loop: Overfit a Single Branch

- 1.9. Data Loader Lite

- 1.10. Parameter Sharing:

wte&lm_head - 1.11. Model Initialization:

std 0.02,residual init

- 1.1. Loading the

- 2. Let's Make it Fast.

- 3. Model Optimization

- 3.1. Hyperparameters,

AdamW,gradient clipping - 3.2. Learning Rate Scheduler:

Warmup + Cosine Decay - 3.3. Batch Size Schedule, Weight Decay:

FusedAdamW,90ms - 3.4. Gradient Accumulation

- 3.5. Distributed Data Parallel (DPP)

- 3.6. Datasets used in

GPT-2,GPT-3,FineWeb(EDU) - 3.7. Validation Data Split, Validation Loss, Sampling Revive

- 3.8. Evaluation:

HellaSwag, Starting the Run

- 3.1. Hyperparameters,

- 4. Results!!!

Appendix¶

Figures¶

- A1. GPT-2 Scaling Laws: LAMBADA.

- A2. GPT-2 Model Architecture.

- A3. TensorFloat-32 (TF32).

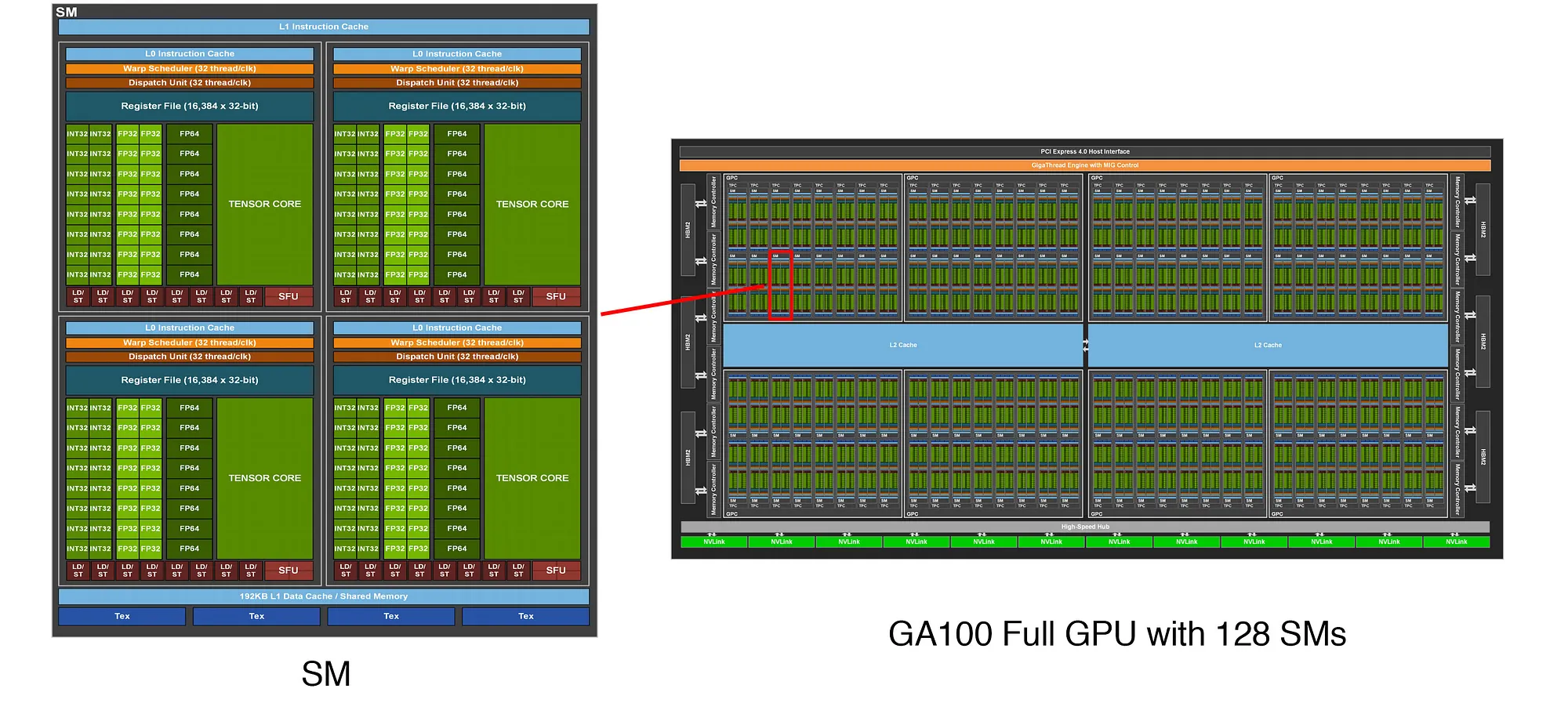

- A4. Tensor Cores: Fast Matrix Multiply-Add (FMMA) with FP16 Input and FP32 Compute Capabilities.

- A5. A Streaming Multiprocessor (SM) & A GA100 Full GPU with 128 SMs.

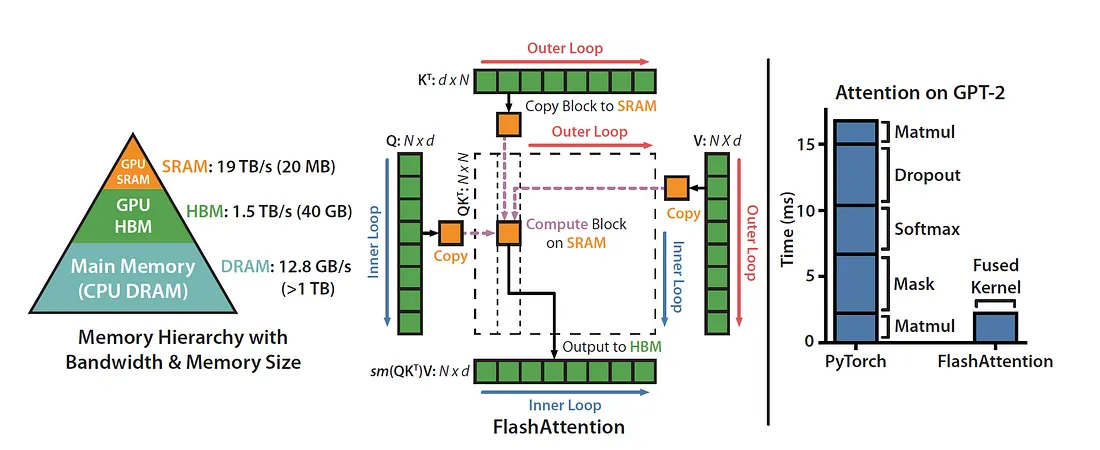

- A6. CPU-GPU Memory Management.

- A7. FlashAttention.

- A8. Kernel Fusion: A Comparison between Standard Attention and FlashAttention.

Tables¶

0. Introduction¶

We reproduce the GPT-2 (124M) from scratch. This video covers the whole process: First we build the GPT-2 network, then we optimize its training to be really fast, then we set up the training run following the GPT-2 and GPT-3 paper and their hyperparameters, then we hit run, and come back the next morning to see our results, and enjoy some amusing model generations. Keep in mind that in some places this video builds on the knowledge from earlier videos in the Neural networks: Zero to Hero playlist.

NVIDIA GPU Architectures: Precision Support + Memory Range + Kaggle Notebook Limitations¶

Caveat 1: Ampere GPU architecture isn't available as an option in Kaggle notebooks. The only GPU architectures in Kaggle notebooks are Pascal and Turing. As such the GPUs used in this GPT-2 implementation from scratch couldn't run

TF32, one of the primary initial upgrades, as a model training speedup improvement. Therefore, all of the training times here are slower than those in the original tutorial.

Caveat 2: The GPUs available in Kaggle notebooks have a smaller memory so we reduced batch size from 16 to 4 to ensure GPU fit (avoid out-of-memory error). This probably also makes the training times here slower.

Caveat 3: Unfortunately, Kaggle notebooks do not support launching multi-process jobs liketorchrun(which is required for Distributed Data Parallel across multiple GPUs). This is because Kaggle kernels are sandboxed and give you access to only one GPU, with no root shell or multi-GPU orchestration capability. As such sections 3.5 to 3.8 was not implemented in this notebook.

NVIDIA GPU Architecture Precision Support Table¶

This table summarizes precision support (TF32, FP32, FP16, BF16) for major NVIDIA GPU architectures, along with example GPUs and memory size ranges.

| Architecture | TF32 | FP32 | FP16 | BF16 | Example GPUs | Memory Size Range |

|---|---|---|---|---|---|---|

| Pascal | ❌ | ✅ | ⚠️ | ❌ | Tesla P100, GTX 1080 Ti | 8–16 GB (up to 24 GB on P40) |

| Volta | ❌ | ✅ | ✅ | ❌ | Tesla V100 | 16–32 GB HBM2 |

| Turing | ❌ | ✅ | ✅ | ❌ | RTX 2080 Ti, T4, Quadro RTX 6000 | 8–48 GB (Quadro) |

| Ampere | ✅ | ✅ | ✅ | ✅ | A100, RTX 3090, RTX A6000 | 16–80 GB (A100 up to 80 GB) |

| Ada (Lovelace) | ✅ | ✅ | ✅ | ✅ | RTX 4090, RTX 4080, RTX 6000 Ada | 16–48 GB |

| Hopper | ✅ | ✅ | ✅ | ✅ | H100 | 80–96 GB HBM3 |

Notes:¶

- Pascal (P100): Supports FP16 storage only, no Tensor Cores.

- Volta (V100): First to support Tensor Cores for FP16, but no TF32/BF16 support.

- Turing: Accelerated FP16 but lacks TF32/BF16 support.

- Ampere: Introduced TF32 and BF16 with Tensor Core support.

- Hopper: Top-tier support for TF32/BF16 and transformer acceleration.

🔎 Quick Legend¶

- ✅ — (YES) Fully supported in hardware.

- ❌ — (NO) Not supported in hardware.

- ⚠️ — (PARTIAL) Supported but without speedup (e.g., storage only or no tensor core support).

Model Overview¶

GPT-2 was released by OpenAI in 2019 with:

- A blog post

- A paper

- Open-source code on GitHub

There are 4 models in the GPT-2 mini-series:

124M, 355M, 774M, 1558M parameters

We'll focus on GPT-2 124M, which has:

12 layers768 hidden dimensions

0.1. GPT-2 (124M) OpenAI Checkpoint¶

Let's dive into OpenAI GPT-2.

Scaling Laws¶

GPT-2 exemplifies scaling laws:

- Model size (x-axis) vs. downstream task performance (y-axis)

- Larger models improve performance on tasks like translation, summarization, QA, etc.

Model Details and Training Targets¶

- Although GPT-2's code was in TensorFlow, we’ll use the HuggingFace Transformers version in PyTorch.

- Validation loss is used to measure the model’s ability to predict the next token on unseen data.

Figure 1. GPT-2 Scaling Laws: LAMBADA. (Source: Claude AI)

from transformers import GPT2LMHeadModel

2025-08-07 18:38:31.441973: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:477] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered WARNING: All log messages before absl::InitializeLog() is called are written to STDERR E0000 00:00:1754591911.604171 36 cuda_dnn.cc:8310] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered E0000 00:00:1754591911.652419 36 cuda_blas.cc:1418] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

model_hf = GPT2LMHeadModel.from_pretrained("gpt2") # 124M [gpt2-xl: 1558M]

sd_hf = model_hf.state_dict()

for k, v in sd_hf.items():

print(k, v.shape)

config.json: 0%| | 0.00/665 [00:00<?, ?B/s]

model.safetensors: 0%| | 0.00/548M [00:00<?, ?B/s]

generation_config.json: 0%| | 0.00/124 [00:00<?, ?B/s]

transformer.wte.weight torch.Size([50257, 768]) transformer.wpe.weight torch.Size([1024, 768]) transformer.h.0.ln_1.weight torch.Size([768]) transformer.h.0.ln_1.bias torch.Size([768]) transformer.h.0.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.0.attn.c_attn.bias torch.Size([2304]) transformer.h.0.attn.c_proj.weight torch.Size([768, 768]) transformer.h.0.attn.c_proj.bias torch.Size([768]) transformer.h.0.ln_2.weight torch.Size([768]) transformer.h.0.ln_2.bias torch.Size([768]) transformer.h.0.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.0.mlp.c_fc.bias torch.Size([3072]) transformer.h.0.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.0.mlp.c_proj.bias torch.Size([768]) transformer.h.1.ln_1.weight torch.Size([768]) transformer.h.1.ln_1.bias torch.Size([768]) transformer.h.1.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.1.attn.c_attn.bias torch.Size([2304]) transformer.h.1.attn.c_proj.weight torch.Size([768, 768]) transformer.h.1.attn.c_proj.bias torch.Size([768]) transformer.h.1.ln_2.weight torch.Size([768]) transformer.h.1.ln_2.bias torch.Size([768]) transformer.h.1.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.1.mlp.c_fc.bias torch.Size([3072]) transformer.h.1.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.1.mlp.c_proj.bias torch.Size([768]) transformer.h.2.ln_1.weight torch.Size([768]) transformer.h.2.ln_1.bias torch.Size([768]) transformer.h.2.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.2.attn.c_attn.bias torch.Size([2304]) transformer.h.2.attn.c_proj.weight torch.Size([768, 768]) transformer.h.2.attn.c_proj.bias torch.Size([768]) transformer.h.2.ln_2.weight torch.Size([768]) transformer.h.2.ln_2.bias torch.Size([768]) transformer.h.2.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.2.mlp.c_fc.bias torch.Size([3072]) transformer.h.2.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.2.mlp.c_proj.bias torch.Size([768]) transformer.h.3.ln_1.weight torch.Size([768]) transformer.h.3.ln_1.bias torch.Size([768]) transformer.h.3.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.3.attn.c_attn.bias torch.Size([2304]) transformer.h.3.attn.c_proj.weight torch.Size([768, 768]) transformer.h.3.attn.c_proj.bias torch.Size([768]) transformer.h.3.ln_2.weight torch.Size([768]) transformer.h.3.ln_2.bias torch.Size([768]) transformer.h.3.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.3.mlp.c_fc.bias torch.Size([3072]) transformer.h.3.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.3.mlp.c_proj.bias torch.Size([768]) transformer.h.4.ln_1.weight torch.Size([768]) transformer.h.4.ln_1.bias torch.Size([768]) transformer.h.4.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.4.attn.c_attn.bias torch.Size([2304]) transformer.h.4.attn.c_proj.weight torch.Size([768, 768]) transformer.h.4.attn.c_proj.bias torch.Size([768]) transformer.h.4.ln_2.weight torch.Size([768]) transformer.h.4.ln_2.bias torch.Size([768]) transformer.h.4.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.4.mlp.c_fc.bias torch.Size([3072]) transformer.h.4.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.4.mlp.c_proj.bias torch.Size([768]) transformer.h.5.ln_1.weight torch.Size([768]) transformer.h.5.ln_1.bias torch.Size([768]) transformer.h.5.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.5.attn.c_attn.bias torch.Size([2304]) transformer.h.5.attn.c_proj.weight torch.Size([768, 768]) transformer.h.5.attn.c_proj.bias torch.Size([768]) transformer.h.5.ln_2.weight torch.Size([768]) transformer.h.5.ln_2.bias torch.Size([768]) transformer.h.5.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.5.mlp.c_fc.bias torch.Size([3072]) transformer.h.5.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.5.mlp.c_proj.bias torch.Size([768]) transformer.h.6.ln_1.weight torch.Size([768]) transformer.h.6.ln_1.bias torch.Size([768]) transformer.h.6.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.6.attn.c_attn.bias torch.Size([2304]) transformer.h.6.attn.c_proj.weight torch.Size([768, 768]) transformer.h.6.attn.c_proj.bias torch.Size([768]) transformer.h.6.ln_2.weight torch.Size([768]) transformer.h.6.ln_2.bias torch.Size([768]) transformer.h.6.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.6.mlp.c_fc.bias torch.Size([3072]) transformer.h.6.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.6.mlp.c_proj.bias torch.Size([768]) transformer.h.7.ln_1.weight torch.Size([768]) transformer.h.7.ln_1.bias torch.Size([768]) transformer.h.7.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.7.attn.c_attn.bias torch.Size([2304]) transformer.h.7.attn.c_proj.weight torch.Size([768, 768]) transformer.h.7.attn.c_proj.bias torch.Size([768]) transformer.h.7.ln_2.weight torch.Size([768]) transformer.h.7.ln_2.bias torch.Size([768]) transformer.h.7.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.7.mlp.c_fc.bias torch.Size([3072]) transformer.h.7.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.7.mlp.c_proj.bias torch.Size([768]) transformer.h.8.ln_1.weight torch.Size([768]) transformer.h.8.ln_1.bias torch.Size([768]) transformer.h.8.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.8.attn.c_attn.bias torch.Size([2304]) transformer.h.8.attn.c_proj.weight torch.Size([768, 768]) transformer.h.8.attn.c_proj.bias torch.Size([768]) transformer.h.8.ln_2.weight torch.Size([768]) transformer.h.8.ln_2.bias torch.Size([768]) transformer.h.8.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.8.mlp.c_fc.bias torch.Size([3072]) transformer.h.8.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.8.mlp.c_proj.bias torch.Size([768]) transformer.h.9.ln_1.weight torch.Size([768]) transformer.h.9.ln_1.bias torch.Size([768]) transformer.h.9.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.9.attn.c_attn.bias torch.Size([2304]) transformer.h.9.attn.c_proj.weight torch.Size([768, 768]) transformer.h.9.attn.c_proj.bias torch.Size([768]) transformer.h.9.ln_2.weight torch.Size([768]) transformer.h.9.ln_2.bias torch.Size([768]) transformer.h.9.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.9.mlp.c_fc.bias torch.Size([3072]) transformer.h.9.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.9.mlp.c_proj.bias torch.Size([768]) transformer.h.10.ln_1.weight torch.Size([768]) transformer.h.10.ln_1.bias torch.Size([768]) transformer.h.10.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.10.attn.c_attn.bias torch.Size([2304]) transformer.h.10.attn.c_proj.weight torch.Size([768, 768]) transformer.h.10.attn.c_proj.bias torch.Size([768]) transformer.h.10.ln_2.weight torch.Size([768]) transformer.h.10.ln_2.bias torch.Size([768]) transformer.h.10.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.10.mlp.c_fc.bias torch.Size([3072]) transformer.h.10.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.10.mlp.c_proj.bias torch.Size([768]) transformer.h.11.ln_1.weight torch.Size([768]) transformer.h.11.ln_1.bias torch.Size([768]) transformer.h.11.attn.c_attn.weight torch.Size([768, 2304]) transformer.h.11.attn.c_attn.bias torch.Size([2304]) transformer.h.11.attn.c_proj.weight torch.Size([768, 768]) transformer.h.11.attn.c_proj.bias torch.Size([768]) transformer.h.11.ln_2.weight torch.Size([768]) transformer.h.11.ln_2.bias torch.Size([768]) transformer.h.11.mlp.c_fc.weight torch.Size([768, 3072]) transformer.h.11.mlp.c_fc.bias torch.Size([3072]) transformer.h.11.mlp.c_proj.weight torch.Size([3072, 768]) transformer.h.11.mlp.c_proj.bias torch.Size([768]) transformer.ln_f.weight torch.Size([768]) transformer.ln_f.bias torch.Size([768]) lm_head.weight torch.Size([50257, 768])

sd_hf["transformer.wpe.weight"].view(-1)[:20]

tensor([-0.0188, -0.1974, 0.0040, 0.0113, 0.0638, -0.1050, 0.0369, -0.1680,

-0.0491, -0.0565, -0.0025, 0.0135, -0.0042, 0.0151, 0.0166, -0.1381,

-0.0063, -0.0461, 0.0267, -0.2042])

import matplotlib.pyplot as plt

%matplotlib inline

plt.imshow(sd_hf["transformer.wpe.weight"], cmap="gray")

<matplotlib.image.AxesImage at 0x7fce8040ef50>

Visualization: Positional Embeddings¶

- Each row in the position embedding matrix corresponds to a position in the input (0–1023).

- Learned from scratch — model recovers sinusoidal-like structure over time.

- Early training shows noise; more training leads to smooth, structured embeddings.

Observations¶

- The positional embeddings resemble sinusoids as seen in "Attention Is All You Need", though they are learned (not fixed).

- Position affects attention: the model uses positional info to decide what to attend to.

plt.plot(sd_hf["transformer.wpe.weight"][:, 150])

plt.plot(sd_hf["transformer.wpe.weight"][:, 200])

plt.plot(sd_hf["transformer.wpe.weight"][:, 250])

[<matplotlib.lines.Line2D at 0x7fce80218210>]

# plt.imshow(sd_hf["transformer.h.1.attn.c_attn.weight"][:300,:300], cmap="gray")

# plt.show()

import numpy as np

w = sd_hf["transformer.h.1.attn.c_attn.weight"][:300, :300]

w_np = w.detach().cpu().numpy()

# Optional: normalize to [0,1]

# w_np = (w_np - w_np.min()) / (w_np.max() - w_np.min())

plt.imshow(w_np, cmap="gray")

plt.title("c_attn weight matrix")

plt.colorbar()

plt.show()

Sampling From the Model¶

Use HuggingFace Pipeline¶

from transformers import pipeline

generator = pipeline("text-generation", model="gpt2")

output = generator("Hello, I'm a language model,", max_length=30, num_return_sequences=5)

- Generates 5 completions from the same prompt.

Manual Sampling Process (From Scratch)¶

- Encode the prompt using

tiktoken:

import tiktoken

enc = tiktoken.get_encoding("gpt2")

tokens = enc.encode("Hello, I'm a language model,")

Replicate tokens across 5 sequences and move to GPU.

Generate new tokens iteratively:

- Forward pass to get logits

- Apply top-k sampling (k=50, HuggingFace default)

- Append sampled tokens

- Decode final output

✅ Despite differences in generation (due to internal HuggingFace pipeline quirks), the reproduced model produces coherent text and behaves as expected.

from transformers import pipeline, set_seed

generator = pipeline('text-generation', model='gpt2')

set_seed(42)

generator("Hello, I'm a language model,", max_length=30, num_return_sequences=5)

tokenizer_config.json: 0%| | 0.00/26.0 [00:00<?, ?B/s]

vocab.json: 0%| | 0.00/1.04M [00:00<?, ?B/s]

merges.txt: 0%| | 0.00/456k [00:00<?, ?B/s]

tokenizer.json: 0%| | 0.00/1.36M [00:00<?, ?B/s]

Device set to use cuda:0 Truncation was not explicitly activated but `max_length` is provided a specific value, please use `truncation=True` to explicitly truncate examples to max length. Defaulting to 'longest_first' truncation strategy. If you encode pairs of sequences (GLUE-style) with the tokenizer you can select this strategy more precisely by providing a specific strategy to `truncation`. Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation. Both `max_new_tokens` (=256) and `max_length`(=30) seem to have been set. `max_new_tokens` will take precedence. Please refer to the documentation for more information. (https://huggingface.co/docs/transformers/main/en/main_classes/text_generation)

[{'generated_text': "Hello, I'm a language model, so I can write things that are easy to understand with a little bit of code. But when you think of words, it's hard to think of words that are as simple as a little word in a sentence.\n\nSo I'm going to use a little bit of code, and I'll talk a little bit about the syntax.\n\nThis is how you write your first line:\n\n$trans = new $trans [1, 2, 3, 4]; $trans -> write ( 'Hello, I'm a language model, so I can write things that are easier to understand with a little bit of code.' );\n\nThis code is pretty simple, but it really doesn't have to be. We want to write this code in a few lines, and we're going to use an expression, which is a shorthand for the literal of the language.\n\nIn this case, we're going to use a variable named trans that's a symbol. We want to write this expression as an expression, where we're going to look for a line that matches the line we want to write.\n\nThe syntax for writing a line like this is very simple:\n\n$trans = new $trans [1, 2, 3, 4]; $"},

{'generated_text': "Hello, I'm a language model, I've had a lot of good experiences with it, I'm a native speaker. I've been working with it for almost five years.\n\nWe're working on different programming languages. I'm working on several different languages.\n\nDo I feel like I'm in a better position to work on this type of thing?\n\nNo. I don't feel like I'm in a better position to work on this type of thing. I feel like I'm in a better position to work on code that's actually good.\n\nIt's not like I'm the only person that's been able to master a language. Even if you're not a very good programmer, I'd be more inclined to be able to master that language. That's what I'm working on.\n\nDo you have any thoughts on the idea of using some of the other languages that are now out there, especially Clojure, Python, and even the Java language?\n\nNo, I don't think Clojure is a better language. It's just a better language. I don't think I've ever been able to understand it before. Clojure is a very well-developed language.\n\nI think it's fun to be able to work on other languages, and I think it"},

{'generated_text': 'Hello, I\'m a language model, I\'m an editor," the writer said. "But I don\'t think that\'s a good idea. I mean, what are you doing?"\n\nA few words from the man, who did not return calls.\n\nHe said she was "overworked" and that he had "made a mistake." "I think there\'s not one right answer," he said, adding that he had been told there were "no more questions."\n\nThe writer said the problem stemmed from her job as a writer in the online publication The New Yorker, where she was a part-time writer and editor.\n\nShe said she had been told that while she was happy to work at The New Yorker, her "job at this time was to write fiction and I was not. I thought I could have a full-time job at The New Yorker. I was wrong."\n\nThe writer\'s employer did not respond to a request for comment Friday.\n\nThe writer was a co-founder of G.I. Joe\'s magazine and a co-founder of the online publishing company Ado, which has its own website.\n\nA copy of G.I. Joe\'s website listed her as "a contributing editor and contributing editor to [The New Yorker]."'},

{'generated_text': 'Hello, I\'m a language model, and it\'s not about me. It\'s about people.\n\n"If you\'re a person and you want to tell people what a language is, you have to be able to tell them what the language is about."\n\nLang, who came to the UK with her mother, has been studying English since she was 8.\n\nShe says she is passionate about how to understand people and how they use language.\n\n"I\'m a language model, and it\'s not about me. It\'s about people. It\'s about people as far as I\'m concerned."\n\nShe says she\'s always been interested in learning English and how to express herself and the world around her.\n\nBut she also says she doesn\'t understand why some people don\'t understand her and her language.\n\n"What do you get when you talk about the world of your language?"\n\n"You get to know people and you know people speak more than you do, but you\'re not allowed to do that.\n\n"So you\'re not allowed to do that."\n\nTheresa May has repeatedly claimed she wants to "unite the world" and is working to create an "open-ended" international language system.\n\nBut the Government has'},

{'generated_text': 'Hello, I\'m a language model, not a language model. I\'m thinking of the languages in which we have formal semantics. One of my favorite languages is C#, which is the language of the language model. We\'re not talking about the semantics of a language model in a formal sense. We\'re talking about language models in which the language model is the only set of semantics that you can apply to any particular language.\n\nOne of the things I like about this kind of formal semantics is that it\'s a good way to develop a language model without having to go through languages that are not formal models. And I think you can do it with C#, which is not formal models.\n\nA lot of the things you will be interested in coming out of this are examples of non-formal semantics. I would like to talk about the second way that you can say, "I want to write this language model in C#."\n\nThere\'s a lot of things that you will be interested in. First of all, we have the language model. It\'s a language model, it\'s not a syntax model. We have a language model that we can do what we want. It\'s a language model that we can look at. It\'s a language model that you can apply to'}]

# let's instead sample manually

import torch

from torch.nn import functional as F

model_ = GPT2LMHeadModel.from_pretrained("gpt2") # 124M

model_.eval()

model_.to('cuda')

torch.manual_seed(42)

torch.cuda.manual_seed(42)

tokens = [15496, 11, 314, 1101, 257, 3303, 2746, 11] # "Hello, I'm a language model,"

tokens = torch.tensor(tokens, dtype=torch.long) # (8,)

tokens = tokens.unsqueeze(0).repeat(5, 1) # (5, 8)

x = tokens.to('cuda')

# generate!

while x.size(1) < 30: # max_length=30

# forward the model to get the logits

with torch.no_grad():

logits = model_(x)[0] # (B, T, vocab_size)

# take the logits at the last position

logits = logits[:, -1, :] # (B, vocab_size)

# get the probabilities

probs = F.softmax(logits, dim=-1)

# do top-k sampling of 50 (huggingface pipeline default)

# topk_probs here becomes (5, 50), topk_indices is (5, 50)

topk_probs, topk_indices = torch.topk(probs, 50, dim=-1)

# select a token from the top-k probabilities

# note: multinomial does not demand the input to sum to 1

ix = torch.multinomial(topk_probs, 1) # (B, 1)

# gather the corresponding indices

xcol = torch.gather(topk_indices, -1, ix) # (B, 1)

# append to the sequence

x = torch.cat((x, xcol), dim=1)

# print the generated text

import tiktoken

enc = tiktoken.get_encoding('gpt2')

for i in range(5):

tokens = x[i, :30].tolist()

decoded = enc.decode(tokens)

print(">", decoded)

> Hello, I'm a language model, not a program. So this morning I started studying for the interview in the lab. This was not > Hello, I'm a language model, and one of the main things that bothers me when they create languages is how easy it becomes to create something that > Hello, I'm a language model, and I wrote it off on the grounds that a language model would make me more fluent. But I'm not > Hello, I'm a language model, I really like languages. I like languages because like, they're good. And the way we talk about languages > Hello, I'm a language model, a language model I'm using for data modelling. All I did was test the results and then I wrote some

1. GPT-2 nn.Module¶

Goal¶

- Re-implement GPT-2 from scratch in PyTorch

- Load and validate OpenAI weights

- Eventually train the model from scratch and compare performance

Differences Between GPT-2 and Original Transformer¶

- GPT-2 is decoder-only: the encoder and cross-attention layers are removed.

- LayerNorms are moved before the attention and MLP blocks.

- An additional final LayerNorm is added before the classification head.

Figure 2: GPT-2 Model Architecture. (Source)

Model Skeleton (Architecture Overview)¶

Core container:

nn.ModuleDictwith the following:wte: token embeddingswpe: positional embeddingsh: list of transformer blocks (nn.ModuleListof 12 blocks)ln_f: final LayerNormlm_head: final linear projection layer (no bias)

class GPT2(nn.Module):

def __init__(self, config):

super().__init__()

self.wte = nn.Embedding(config.vocab_size, config.n_embd)

self.wpe = nn.Embedding(config.block_size, config.n_embd)

self.h = nn.ModuleList([Block(config) for _ in range(config.n_layer)])

self.ln_f = nn.LayerNorm(config.n_embd)

self.lm_head = nn.Linear(config.n_embd, config.vocab_size, bias=False)

Transformer Block Details¶

Each block contains:

- Pre-Norm residual paths

- Self-Attention followed by MLP

Attention = Communication Layer¶

- Query/Key/Value projections → scores → softmax → weighted sum

- Implemented efficiently using tensor gymnastics for parallelism

- Uses causal masking to ensure auto-regressive behavior

MLP = Per-token Processing¶

- Two linear layers with GELU (PyTorch doc.) non-linearity

self.c_fc = nn.Linear(n_embd, 4 * n_embd)

self.gelu = nn.GELU(approximate='tanh')

self.c_proj = nn.Linear(4 * n_embd, n_embd)

1.1. Loading the huggingface/GPT-2 Parameters¶

Loading and Matching GPT-2 Weights¶

Implement a custom GPT-2 class from scratch for full understanding.

Load OpenAI's GPT-2 weights into your class implementation:

- This confirms structural correctness

- Matching results with HuggingFace’s pretrained model confirms success

Step 1: Load Pretrained Model Using HuggingFace¶

from transformers import GPT2LMHeadModel

model = GPT2LMHeadModel.from_pretrained("gpt2")

gpt2refers to the 124M model; for 1.5B use"gpt2-xl".- This loads a PyTorch-friendly version of the pretrained model.

Step 2: Explore Model Weights¶

Use

.state_dict()to view raw tensors:- Token Embeddings:

[50257, 768]→ vocabulary size × embedding size - Position Embeddings:

[1024, 768]→ max sequence length × embedding size

- Token Embeddings:

Visualization: Positional Embeddings¶

- Learned sinusoidal-like patterns over time.

- Smooth after training, noisy at init.

1.2. Forward Pass: Get Logits¶

We need to add the forward pass to the model so we can generate logits.

def forward(self, idx):

b, t = idx.size()

token_embeddings = self.transformer.wte(idx) # (b, t, n_embd)

position_embeddings = self.transformer.wpe(torch.arange(0, t, device=idx.device)) # (t, n_embd)

x = token_embeddings + position_embeddings

for block in self.transformer.h:

x = block(x)

x = self.transformer.ln_f(x)

logits = self.lm_head(x) # (b, t, vocab_size)

return logits

import math

from dataclasses import dataclass

import torch

import torch.nn as nn

from torch.nn import functional as F

import tiktoken

# -----------------------------------------------------------------------------

class DataLoaderLite:

def __init__(self, B, T):

self.B = B

self.T = T

# at init load tokens from disk and store them in memory

with open('input.txt', 'r') as f:

text = f.read()

enc = tiktoken.get_encoding('gpt2')

tokens = enc.encode(text)

self.tokens = torch.tensor(tokens)

print(f"loaded {len(self.tokens)} tokens")

print(f"1 epoch = {len(self.tokens) // (B * T)} batches")

# state

self.current_position = 0

def next_batch(self):

B, T = self.B, self.T

buf = self.tokens[self.current_position : self.current_position+B*T+1]

x = (buf[:-1]).view(B, T) # inputs

y = (buf[1:]).view(B, T) # targets

# advance the position in the tensor

self.current_position += B * T

# if loading the next batch would be out of bounds, reset

if self.current_position + (B * T + 1) > len(self.tokens):

self.current_position = 0

return x, y

# -----------------------------------------------------------------------------

class CausalSelfAttention(nn.Module):

def __init__(self, config):

super().__init__()

assert config.n_embd % config.n_head == 0

# key, query, value projections for all heads, but in a batch

self.c_attn = nn.Linear(config.n_embd, 3 * config.n_embd)

# output projection

self.c_proj = nn.Linear(config.n_embd, config.n_embd)

self.c_proj.NANOGPT_SCALE_INIT = 1

# regularization

self.n_head = config.n_head

self.n_embd = config.n_embd

# not really a 'bias', more of a mask, but following the OpenAI/HF naming though

self.register_buffer("bias", torch.tril(torch.ones(config.block_size, config.block_size))

.view(1, 1, config.block_size, config.block_size))

def forward(self, x):

B, T, C = x.size() # batch size, sequence length, embedding dimensionality (n_embd)

# calculate query, key, values for all heads in batch and move head forward to be the batch dim

# nh is "number of heads", hs is "head size", and C (number of channels) = nh * hs

# e.g. in GPT-2 (124M), n_head=12, hs=64, so nh*hs=C=768 channels in the Transformer

qkv = self.c_attn(x)

q, k, v = qkv.split(self.n_embd, dim=2)

k = k.view(B, T, self.n_head, C // self.n_head).transpose(1, 2) # (B, nh, T, hs)

q = q.view(B, T, self.n_head, C // self.n_head).transpose(1, 2) # (B, nh, T, hs)

v = v.view(B, T, self.n_head, C // self.n_head).transpose(1, 2) # (B, nh, T, hs)

# attention (materializes the large (T,T) matrix for all the queries and keys)

att = (q @ k.transpose(-2, -1)) * (1.0 / math.sqrt(k.size(-1)))

att = att.masked_fill(self.bias[:,:,:T,:T] == 0, float('-inf'))

att = F.softmax(att, dim=-1)

y = att @ v # (B, nh, T, T) x (B, nh, T, hs) -> (B, nh, T, hs)

y = y.transpose(1, 2).contiguous().view(B, T, C) # re-assemble all head outputs side by side

# output projection

y = self.c_proj(y)

return y

# -----------------------------------------------------------------------------

class MLP(nn.Module):

def __init__(self, config):

super().__init__()

self.c_fc = nn.Linear(config.n_embd, 4 * config.n_embd)

self.gelu = nn.GELU(approximate='tanh')

self.c_proj = nn.Linear(4 * config.n_embd, config.n_embd)

self.c_proj.NANOGPT_SCALE_INIT = 1

def forward(self, x):

x = self.c_fc(x)

x = self.gelu(x)

x = self.c_proj(x)

return x

# -----------------------------------------------------------------------------

class Block(nn.Module):

def __init__(self, config):

super().__init__()

self.ln_1 = nn.LayerNorm(config.n_embd)

self.attn = CausalSelfAttention(config)

self.ln_2 = nn.LayerNorm(config.n_embd)

self.mlp = MLP(config)

def forward(self, x):

x = x + self.attn(self.ln_1(x))

x = x + self.mlp(self.ln_2(x))

return x

# -----------------------------------------------------------------------------

@dataclass

class GPTConfig:

block_size: int = 1024 # max sequence length

vocab_size: int = 50257 # number of tokens: 50,000 BPE merges + 256 bytes tokens + 1 <|endoftext|> token

n_layer: int = 12 # number of layers

n_head: int = 12 # number of heads

n_embd: int = 768 # embedding dimension

class GPT(nn.Module):

def __init__(self, config):

super().__init__()

self.config = config

self.transformer = nn.ModuleDict(dict(

wte = nn.Embedding(config.vocab_size, config.n_embd),

wpe = nn.Embedding(config.block_size, config.n_embd),

h = nn.ModuleList([Block(config) for _ in range(config.n_layer)]),

ln_f = nn.LayerNorm(config.n_embd),

))

self.lm_head = nn.Linear(config.n_embd, config.vocab_size, bias=False)

def forward(self, idx):

# idx is of shape (B, T)

B, T = idx.size()

assert T <= self.config.block_size, f"Cannot forward sequence of length {T}, block size is only {self.config.block_size}"

# forward the token and posisition embeddings

pos = torch.arange(0, T, dtype=torch.long, device=idx.device) # shape (T)

pos_emb = self.transformer.wpe(pos) # position embeddings of shape (T, n_embd)

tok_emb = self.transformer.wte(idx) # token embeddings of shape (B, T, n_embd)

x = tok_emb + pos_emb

# forward the blocks of the transformer

for block in self.transformer.h:

x = block(x)

# forward the final layernorm and the classifier

x = self.transformer.ln_f(x)

logits = self.lm_head(x) # (B, T, vocab_size)

return logits

@classmethod

def from_pretrained(cls, model_type):

"""Loads pretrained GPT-2 model weights from huggingface"""

assert model_type in {'gpt2', 'gpt2-medium', 'gpt2-large', 'gpt2-xl'}

from transformers import GPT2LMHeadModel

print("loading weights from pretrained gpt: %s" % model_type)

# n_layer, n_head and n_embd are determined from model_type

config_args = {

'gpt2': dict(n_layer=12, n_head=12, n_embd=768), # 124M params

'gpt2-medium': dict(n_layer=24, n_head=16, n_embd=1024), # 350M params

'gpt2-large': dict(n_layer=36, n_head=20, n_embd=1280), # 774M params

'gpt2-xl': dict(n_layer=48, n_head=25, n_embd=1600), # 1558M params

}[model_type]

config_args['vocab_size'] = 50257 # always 50257 for GPT model checkpoints

config_args['block_size'] = 1024 # always 1024 for GPT model checkpoints

# create a from-scratch initialized minGPT model

config = GPTConfig(**config_args)

model = GPT(config)

sd = model.state_dict()

sd_keys = sd.keys()

sd_keys = [k for k in sd_keys if not k.endswith('.attn.bias')] # discard this mask / buffer, not a param

# init a huggingface/transformers model

model_hf = GPT2LMHeadModel.from_pretrained(model_type)

sd_hf = model_hf.state_dict()

# copy while ensuring all of the parameters are aligned and match in names and shapes

sd_keys_hf = sd_hf.keys()

sd_keys_hf = [k for k in sd_keys_hf if not k.endswith('.attn.masked_bias')] # ignore these, just a buffer

sd_keys_hf = [k for k in sd_keys_hf if not k.endswith('.attn.bias')] # same, just the mask (buffer)

transposed = ['attn.c_attn.weight', 'attn.c_proj.weight', 'mlp.c_fc.weight', 'mlp.c_proj.weight']

# basically the openai checkpoints use a "Conv1D" module, but we only want to use a vanilla Linear

# this means that we have to transpose these weights when we import them

assert len(sd_keys_hf) == len(sd_keys), f"mismatched keys: {len(sd_keys_hf)} != {len(sd_keys)}"

for k in sd_keys_hf:

if any(k.endswith(w) for w in transposed):

# special treatment for the Conv1D weights we need to transpose

assert sd_hf[k].shape[::-1] == sd[k].shape

with torch.no_grad():

sd[k].copy_(sd_hf[k].t())

else:

# vanilla copy over the other parameters

assert sd_hf[k].shape == sd[k].shape

with torch.no_grad():

sd[k].copy_(sd_hf[k])

return model

# -----------------------------------------------------------------------------

1.3. sampling init, prefix tokens, Tokenization¶

import tiktoken

enc = tiktoken.get_encoding("gpt2")

tokens = enc.encode("Hello, I'm a language model,")

- Input prompt encoded to tokens

- Tokens replicated across batch

1.4. Sampling Loop¶

logits = model(x)

logits = logits[:, -1, :]

probs = F.softmax(logits, dim=-1)

x_next = torch.multinomial(probs, num_samples=1)

x = torch.cat((x, x_next), dim=1)

Generation Loop (Manual Sampling)¶

Prefix tokens initialized and extended one token at a time

At each step:

- Forward pass

- Extract last timestep's logits

- Apply softmax and top-k filtering

- Sample and append next token to sequence

Result: a growing tensor

xof shape(batch_size, current_length)

num_return_sequences = 5

max_length = 30

model = GPT.from_pretrained('gpt2')

model.eval()

model.to('cuda')

# prefix tokens

import tiktoken

enc = tiktoken.get_encoding('gpt2')

tokens = enc.encode("Hello, I'm a language model,")

tokens = torch.tensor(tokens, dtype=torch.long) # (8,)

tokens = tokens.unsqueeze(0).repeat(num_return_sequences, 1) # (5, 8)

x = tokens.to('cuda')

# generate! right now x is (B, T) where B = 5, T = 8

# set the seed to 42

torch.manual_seed(42)

torch.cuda.manual_seed(42)

while x.size(1) < max_length:

# forward the model to get the logits

with torch.no_grad():

logits = model(x) # (B, T, vocab_size)

# take the logits at the last position

logits = logits[:, -1, :] # (B, vocab_size)

# get the probabilities

probs = F.softmax(logits, dim=-1)

# do top-k sampling of 50 (huggingface pipeline default)

# topk_probs here becomes (5, 50), topk_indices is (5, 50)

topk_probs, topk_indices = torch.topk(probs, 50, dim=-1)

# select a token from the top-k probabilities

# note: multinomial does not demand the input to sum to 1

ix = torch.multinomial(topk_probs, 1) # (B, 1)

# gather the corresponding indices

xcol = torch.gather(topk_indices, -1, ix) # (B, 1)

# append to the sequence

x = torch.cat((x, xcol), dim=1)

# print the generated text

for i in range(num_return_sequences):

tokens = x[i, :max_length].tolist()

decoded = enc.decode(tokens)

print(">", decoded)

loading weights from pretrained gpt: gpt2 > Hello, I'm a language model, not a program. So this morning I started studying for the interview in the lab. This was not > Hello, I'm a language model, and one of the main things that bothers me when they create languages is how easy it becomes to create something that > Hello, I'm a language model, and I wrote it off on the grounds that a language model would make me more fluent. But I'm not > Hello, I'm a language model, I really like languages. I like languages because like, they're good. And the way we talk about languages > Hello, I'm a language model, a language model I'm using for data modelling. All I did was test the results and then I wrote some

1.5. Sample, Auto-detect the Device¶

device = 'cuda' if torch.cuda.is_available() else 'cpu'

model = model.to(device)

x = tokens.to(device)

- Default to GPU when available

- Enable fast matrix operations and parallelism

# attempt to autodetect the device

device = "cpu"

if torch.cuda.is_available():

device = "cuda"

elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():

device = "mps"

print(f"using device: {device}")

num_return_sequences = 5

max_length = 30

model = GPT(GPTConfig())

model.eval()

model.to(device)

# prefix tokens

import tiktoken

enc = tiktoken.get_encoding('gpt2')

tokens = enc.encode("Hello, I'm a language model,")

tokens = torch.tensor(tokens, dtype=torch.long) # (8,)

tokens = tokens.unsqueeze(0).repeat(num_return_sequences, 1) # (5, 8)

x = tokens.to(device)

# generate! right now x is (B, T) where B = 5, T = 8

# set the seed to 42

torch.manual_seed(42)

torch.cuda.manual_seed(42)

while x.size(1) < max_length:

# forward the model to get the logits

with torch.no_grad():

logits = model(x) # (B, T, vocab_size)

# take the logits at the last position

logits = logits[:, -1, :] # (B, vocab_size)

# get the probabilities

probs = F.softmax(logits, dim=-1)

# do top-k sampling of 50 (huggingface pipeline default)

# topk_probs here becomes (5, 50), topk_indices is (5, 50)

topk_probs, topk_indices = torch.topk(probs, 50, dim=-1)

# select a token from the top-k probabilities

# note: multinomial does not demand the input to sum to 1

ix = torch.multinomial(topk_probs, 1) # (B, 1)

# gather the corresponding indices

xcol = torch.gather(topk_indices, -1, ix) # (B, 1)

# append to the sequence

x = torch.cat((x, xcol), dim=1)

# print the generated text

for i in range(num_return_sequences):

tokens = x[i, :max_length].tolist()

decoded = enc.decode(tokens)

print(">", decoded)

using device: cuda > Hello, I'm a language model, electronics sped Links Alternatively aerobic baptism Its know des cautiously exerciseBasically Simpson Patrol qual arbitration PIDTown decksDamn You Pegasus > Hello, I'm a language model, artist sou losMHz Gadget textedoidal Ezekielminus 141 Lifhari domain Annie Kushicit populism wealth alliances archaic calib rich > Hello, I'm a language model, sonicedomost declared-$21Mrswild PlainsIron fut jung cannon sorcererFour practical Grac worstannot bothered Containerstadt > Hello, I'm a language model, tranquiloneliness Policyicking congregation gunned FL stressesFactor restraining Rusty fermented Missileanguard viewing adjusting reopenWilliamsrowdWarrenattack hen > Hello, I'm a language model,alpha 520 Follow designate Main zincoraVOLOver855 procession equippediem dean Turtles vocyah================================================================ressoririn situations RIS

1.6. Model Training: Data Batches (B,T) --> Logits (B,T,C)¶

Use a text dataset like TinyShakespeare.

Tokenize the full text, split into fixed-size sequences of shape

(B, T).During training, model predicts next token for each position:

xinput → model → logits (shape:(B, T, vocab_size))

Training Setup¶

- Labels are inputs shifted left by one

- Use cross-entropy loss:

loss = F.cross_entropy(logits.view(-1, logits.size(-1)), targets.view(-1))

Training loop:¶

- Forward pass

- Compute loss

loss.backward()optimizer.step()optimizer.zero_grad()

1.7. Cross Entropy Loss¶

- Align the targets by shifting

xby one position. - Flatten logits and targets for compatibility with loss function:

logits.view(-1, logits.size(-1))--> shape:(B*T, vocab_size)targets.view(-1)--> shape:(B*T)

loss = F.cross_entropy(logits.view(-1, logits.size(-1)), targets.view(-1))

- Efficient calculation across all time steps and batches.

class GPT(nn.Module):

def __init__(self, config):

super().__init__()

self.config = config

self.transformer = nn.ModuleDict(dict(

wte = nn.Embedding(config.vocab_size, config.n_embd),

wpe = nn.Embedding(config.block_size, config.n_embd),

h = nn.ModuleList([Block(config) for _ in range(config.n_layer)]),

ln_f = nn.LayerNorm(config.n_embd),

))

self.lm_head = nn.Linear(config.n_embd, config.vocab_size, bias=False)

# weight sharing scheme

self.transformer.wte.weight = self.lm_head.weight

# init params

self.apply(self._init_weights)

def _init_weights(self, module):

if isinstance(module, nn.Linear):

std = 0.02

if hasattr(module, 'NANOGPT_SCALE_INIT'):

std *= (2 * self.config.n_layer) ** -0.5

torch.nn.init.normal_(module.weight, mean=0.0, std=std)

if module.bias is not None:

torch.nn.init.zeros_(module.bias)

elif isinstance(module, nn.Embedding):

torch.nn.init.normal_(module.weight, mean=0.0, std=0.02)

def forward(self, idx, targets=None):

# idx is of shape (B, T)

B, T = idx.size()

assert T <= self.config.block_size, f"Cannot forward sequence of length {T}, block size is only {self.config.block_size}"

# forward the token and posisition embeddings

pos = torch.arange(0, T, dtype=torch.long, device=idx.device) # shape (T)

pos_emb = self.transformer.wpe(pos) # position embeddings of shape (T, n_embd)

tok_emb = self.transformer.wte(idx) # token embeddings of shape (B, T, n_embd)

x = tok_emb + pos_emb

# forward the blocks of the transformer

for block in self.transformer.h:

x = block(x)

# forward the final layernorm and the classifier

x = self.transformer.ln_f(x)

logits = self.lm_head(x) # (B, T, vocab_size)

loss = None

if targets is not None:

loss = F.cross_entropy(logits.view(-1, logits.size(-1)), targets.view(-1))

return logits, loss

@classmethod

def from_pretrained(cls, model_type):

"""Loads pretrained GPT-2 model weights from huggingface"""

assert model_type in {'gpt2', 'gpt2-medium', 'gpt2-large', 'gpt2-xl'}

from transformers import GPT2LMHeadModel

print("loading weights from pretrained gpt: %s" % model_type)

# n_layer, n_head and n_embd are determined from model_type

config_args = {

'gpt2': dict(n_layer=12, n_head=12, n_embd=768), # 124M params

'gpt2-medium': dict(n_layer=24, n_head=16, n_embd=1024), # 350M params

'gpt2-large': dict(n_layer=36, n_head=20, n_embd=1280), # 774M params

'gpt2-xl': dict(n_layer=48, n_head=25, n_embd=1600), # 1558M params

}[model_type]

config_args['vocab_size'] = 50257 # always 50257 for GPT model checkpoints

config_args['block_size'] = 1024 # always 1024 for GPT model checkpoints

# create a from-scratch initialized minGPT model

config = GPTConfig(**config_args)

model = GPT(config)

sd = model.state_dict()

sd_keys = sd.keys()

sd_keys = [k for k in sd_keys if not k.endswith('.attn.bias')] # discard this mask / buffer, not a param

# init a huggingface/transformers model

model_hf = GPT2LMHeadModel.from_pretrained(model_type)

sd_hf = model_hf.state_dict()

# copy while ensuring all of the parameters are aligned and match in names and shapes

sd_keys_hf = sd_hf.keys()

sd_keys_hf = [k for k in sd_keys_hf if not k.endswith('.attn.masked_bias')] # ignore these, just a buffer

sd_keys_hf = [k for k in sd_keys_hf if not k.endswith('.attn.bias')] # same, just the mask (buffer)

transposed = ['attn.c_attn.weight', 'attn.c_proj.weight', 'mlp.c_fc.weight', 'mlp.c_proj.weight']

# basically the openai checkpoints use a "Conv1D" module, but we only want to use a vanilla Linear

# this means that we have to transpose these weights when we import them

assert len(sd_keys_hf) == len(sd_keys), f"mismatched keys: {len(sd_keys_hf)} != {len(sd_keys)}"

for k in sd_keys_hf:

if any(k.endswith(w) for w in transposed):

# special treatment for the Conv1D weights we need to transpose

assert sd_hf[k].shape[::-1] == sd[k].shape

with torch.no_grad():

sd[k].copy_(sd_hf[k].t())

else:

# vanilla copy over the other parameters

assert sd_hf[k].shape == sd[k].shape

with torch.no_grad():

sd[k].copy_(sd_hf[k])

return model

# -----------------------------------------------------------------------------

# tiny shakespeare dataset

!wget https://raw.githubusercontent.com/karpathy/char-rnn/master/data/tinyshakespeare/input.txt

with open('input.txt', 'r') as f:

text = f.read()

data = text[:1000] # first 1,000 characters

print(data[:100])

--2025-08-07 18:39:01-- https://raw.githubusercontent.com/karpathy/char-rnn/master/data/tinyshakespeare/input.txt Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.110.133, 185.199.109.133, ... Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 1115394 (1.1M) [text/plain] Saving to: ‘input.txt’ input.txt 100%[===================>] 1.06M --.-KB/s in 0.05s 2025-08-07 18:39:01 (20.8 MB/s) - ‘input.txt’ saved [1115394/1115394]

huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks... To disable this warning, you can either: - Avoid using `tokenizers` before the fork if possible - Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)

First Citizen: Before we proceed any further, hear me speak. All: Speak, speak. First Citizen: You

!wc input.txt # 40000 lines ~202K words, ~1.1million bytes

40000 202651 1115394 input.txt

huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks... To disable this warning, you can either: - Avoid using `tokenizers` before the fork if possible - Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)

enc = tiktoken.get_encoding('gpt2')

tokens = enc.encode(data)

print(tokens[:24])

[5962, 22307, 25, 198, 8421, 356, 5120, 597, 2252, 11, 3285, 502, 2740, 13, 198, 198, 3237, 25, 198, 5248, 461, 11, 2740, 13]

import torch

buf = torch.tensor(tokens[:24 + 1])

x = buf[:-1].view(4, 6)

y = buf[1:].view(4, 6)

print(x)

print(y)

tensor([[ 5962, 22307, 25, 198, 8421, 356],

[ 5120, 597, 2252, 11, 3285, 502],

[ 2740, 13, 198, 198, 3237, 25],

[ 198, 5248, 461, 11, 2740, 13]])

tensor([[22307, 25, 198, 8421, 356, 5120],

[ 597, 2252, 11, 3285, 502, 2740],

[ 13, 198, 198, 3237, 25, 198],

[ 5248, 461, 11, 2740, 13, 198]])

# attempt to autodetect the device

device = "cpu"

if torch.cuda.is_available():

device = "cuda"

elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():

device = "mps"

print(f"using device: {device}")

device = "cpu" #override

# get a data batch

import tiktoken

enc = tiktoken.get_encoding('gpt2')

with open('input.txt', 'r') as f:

text = f.read()

text = text[:1000]

tokens = enc.encode(text)

B, T = 4, 32

buf = torch.tensor(tokens[:B*T + 1])

x = buf[:-1].view(B, T)

y = buf[1:].view(B, T)

# get logits

model = GPT(GPTConfig())

model.to(device)

logits, loss = model(x, y)

print(loss)

# import sys; sys.exit(0)

# # prefix tokens

# import tiktoken

# enc = tiktoken.get_encoding('gpt2')

# model.eval()

# num_return_sequences = 5

# max_length = 30

# tokens = enc.encode("Hello, I'm a language model,")

# tokens = torch.tensor(tokens, dtype=torch.long) # (8,)

# tokens = tokens.unsqueeze(0).repeat(num_return_sequences, 1) # (5, 8)

# x = tokens.to(device)

# # generate! right now x is (B, T) where B = 5, T = 8

# # set the seed to 42

# torch.manual_seed(42)

# torch.cuda.manual_seed(42)

# while x.size(1) < max_length:

# # forward the model to get the logits

# with torch.no_grad():

# logits = model(x) # (B, T, vocab_size)

# # take the logits at the last position

# logits = logits[:, -1, :] # (B, vocab_size)

# # get the probabilities

# probs = F.softmax(logits, dim=-1)

# # do top-k sampling of 50 (huggingface pipeline default)

# # topk_probs here becomes (5, 50), topk_indices is (5, 50)

# topk_probs, topk_indices = torch.topk(probs, 50, dim=-1)

# # select a token from the top-k probabilities

# # note: multinomial does not demand the input to sum to 1

# ix = torch.multinomial(topk_probs, 1) # (B, 1)

# # gather the corresponding indices

# xcol = torch.gather(topk_indices, -1, ix) # (B, 1)

# # append to the sequence

# x = torch.cat((x, xcol), dim=1)

# # print the generated text

# for i in range(num_return_sequences):

# tokens = x[i, :max_length].tolist()

# decoded = enc.decode(tokens)

# print(">", decoded)

using device: cuda tensor(11.0088, grad_fn=<NllLossBackward0>)

Expected loss at initialization based on uniform probability:¶

$$ \text{loss} = -\ln\left(\frac{1}{\text{vocab\_size}}\right) = -\ln(\frac{1}{50257}) = 10.824 $$

- The expected loss is close to what we got ($\boldsymbol{10.82}$ vs $\mathbf{11.01}$) so our initialization is good to go.

1.8. Optimization Loop: Overfit a Single Branch¶

- Start training on a single batch to verify correctness.

- We use

AdamW(PyTorch doc.) as the optimization algorithmlr= $\boldsymbol{3 \times 10^{-4}}$ is a pretty good default for most optimization runs @ a very early debugging stage.

# optimize!

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)

for step in range(max_iters):

optimizer.zero_grad()

logits, loss = model(x, y)

loss.backward()

optimizer.step()

print(f"step {i}, loss: {loss.item()}")

- If training works (loss decreases), scaling to the full dataset is safe.

# attempt to autodetect the device

device = "cpu"

if torch.cuda.is_available():

device = "cuda"

elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():

device = "mps"

print(f"using device: {device}")

# get a data batch

import tiktoken

enc = tiktoken.get_encoding('gpt2')

with open('input.txt', 'r') as f:

text = f.read()

text = text[:1000]

tokens = enc.encode(text)

B, T = 4, 32

buf = torch.tensor(tokens[:B*T + 1])

buf = buf.to(device)

x = buf[:-1].view(B, T)

y = buf[1:].view(B, T)

# get logits

model = GPT(GPTConfig())

model.to(device)

# logits, loss = model(x, y)

# print(loss)

# optimize!

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)

for i in range(50):

optimizer.zero_grad()

logits, loss = model(x, y)

loss.backward()

optimizer.step()

print(f"step {i}, loss: {loss.item()}")

using device: cuda step 0, loss: 10.769306182861328 step 1, loss: 8.232959747314453 step 2, loss: 7.903288841247559 step 3, loss: 7.477176666259766 step 4, loss: 7.222799777984619 step 5, loss: 6.974076747894287 step 6, loss: 6.690158367156982 step 7, loss: 6.36968469619751 step 8, loss: 6.096214294433594 step 9, loss: 5.825082778930664 step 10, loss: 5.520833492279053 step 11, loss: 5.2743821144104 step 12, loss: 4.86961555480957 step 13, loss: 7.977803707122803 step 14, loss: 4.721469402313232 step 15, loss: 4.726749420166016 step 16, loss: 4.6562018394470215 step 17, loss: 4.5155439376831055 step 18, loss: 4.344297409057617 step 19, loss: 4.195880889892578 step 20, loss: 4.066141605377197 step 21, loss: 3.942348003387451 step 22, loss: 3.8445816040039062 step 23, loss: 3.771299123764038 step 24, loss: 3.695563316345215 step 25, loss: 3.6219353675842285 step 26, loss: 3.586193084716797 step 27, loss: 3.499006986618042 step 28, loss: 3.433870315551758 step 29, loss: 3.3526525497436523 step 30, loss: 3.2975974082946777 step 31, loss: 3.2394254207611084 step 32, loss: 3.1776838302612305 step 33, loss: 3.130887269973755 step 34, loss: 3.067812442779541 step 35, loss: 2.990708827972412 step 36, loss: 2.9151458740234375 step 37, loss: 2.8503098487854004 step 38, loss: 2.8130929470062256 step 39, loss: 2.8470816612243652 step 40, loss: 3.2798235416412354 step 41, loss: 3.1968610286712646 step 42, loss: 2.7217764854431152 step 43, loss: 2.9068920612335205 step 44, loss: 2.5936026573181152 step 45, loss: 2.48004150390625 step 46, loss: 2.546128988265991 step 47, loss: 2.523922920227051 step 48, loss: 2.3874573707580566 step 49, loss: 2.2789361476898193

1.9. Data Loader Lite¶

The DataLoaderLite is a simple data loader that iterates through the dataset in chunks. It's designed to be efficient by fetching B*T + 1 tokens per batch to ensure the next token is available for loss calculation, and it wraps around to the beginning of the dataset if it reaches the end.

- Simple token-level data loader:

- Slice tokens into

(x, y)pairs x = tokens[i:i+T],y = tokens[i+1:i+T+1]

- Slice tokens into

- Iterate in batches:

(B, T)slices - Great for debugging and small-scale training.

# attempt to autodetect the device

device = "cpu"

if torch.cuda.is_available():

device = "cuda"

elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():

device = "mps"

print(f"using device: {device}")

# get a data batch

train_loader = DataLoaderLite(B=4, T=32)

# get logits

model = GPT(GPTConfig())

model.to(device)

# logits, loss = model(x, y)

# print(loss)

# optimize!

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)

for i in range(50):

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

logits, loss = model(x, y)

loss.backward()

optimizer.step()

print(f"step {i}, loss: {loss.item()}")

using device: cuda loaded 338025 tokens 1 epoch = 2640 batches step 0, loss: 11.054346084594727 step 1, loss: 9.784205436706543 step 2, loss: 9.536847114562988 step 3, loss: 9.280383110046387 step 4, loss: 8.653818130493164 step 5, loss: 8.457844734191895 step 6, loss: 9.097246170043945 step 7, loss: 8.77798080444336 step 8, loss: 8.161457061767578 step 9, loss: 8.045823097229004 step 10, loss: 8.33431625366211 step 11, loss: 7.487844944000244 step 12, loss: 7.906373500823975 step 13, loss: 7.508420467376709 step 14, loss: 7.557555675506592 step 15, loss: 7.272642612457275 step 16, loss: 7.357736587524414 step 17, loss: 8.298192977905273 step 18, loss: 7.260444641113281 step 19, loss: 7.876038074493408 step 20, loss: 7.639876842498779 step 21, loss: 7.8305745124816895 step 22, loss: 6.4622602462768555 step 23, loss: 6.927628993988037 step 24, loss: 6.882352352142334 step 25, loss: 6.784662246704102 step 26, loss: 6.8236894607543945 step 27, loss: 7.606111526489258 step 28, loss: 7.201181411743164 step 29, loss: 6.976284503936768 step 30, loss: 7.04829740524292 step 31, loss: 7.329046249389648 step 32, loss: 7.218647480010986 step 33, loss: 7.086764335632324 step 34, loss: 7.988156318664551 step 35, loss: 7.906185626983643 step 36, loss: 7.785394191741943 step 37, loss: 7.733238697052002 step 38, loss: 8.147987365722656 step 39, loss: 7.602220058441162 step 40, loss: 7.475622653961182 step 41, loss: 7.066772937774658 step 42, loss: 7.190559387207031 step 43, loss: 7.182857990264893 step 44, loss: 7.161768436431885 step 45, loss: 7.199093818664551 step 46, loss: 6.208998680114746 step 47, loss: 6.304914951324463 step 48, loss: 6.973421096801758 step 49, loss: 6.742049694061279

1.10. Parameter Sharing: wte & lm_head¶

- Weight tying between embedding layer and output projection layer:

# weight sharing scheme

self.transformer.wte.weight = self.lm_head.weight

- Reduces parameter count, ensures consistency and often improves performance.

- Output logits become a function of dot product with input embeddings.

print(sd_hf["lm_head.weight"].shape)

print(sd_hf["transformer.wte.weight"].shape)

torch.Size([50257, 768]) torch.Size([50257, 768])

(sd_hf["lm_head.weight"] == sd_hf["transformer.wte.weight"]).all()

tensor(True)

print(sd_hf["lm_head.weight"].data_ptr())

print(sd_hf["transformer.wte.weight"].data_ptr())

140522065219539 140522065219539

1.11. Model Initialization: std 0.02, residual init¶

- Initialize weights using a normal distribution with

std = 0.02:

nn.init.normal_(param, mean=0.0, std=0.02)

- Residual projections initialized with small values to stabilize training.

class CausalSelfAttention(nn.Module):

def __init__(self, config):

super().__init__()

...

self.c_proj.NANOGPT_SCALE_INIT = 1

class GPT(nn.Module):

def __init__(self, config):

super().__init__()

self.config = config

...

def _init_weights(self, module):

if isinstance(module, nn.Linear):

std = 0.02

if hasattr(module, 'NANOGPT_SCALE_INIT'):

std *= (2 * self.config.n_layer) ** -0.5

torch.nn.init.normal_(module.weight, mean=0.0, std=std)

if module.bias is not None:

torch.nn.init.zeros_(module.bias)

elif isinstance(module, nn.Embedding):

torch.nn.init.normal_(module.weight, mean=0.0, std=0.02)

- Often used in GPT-style models to prevent gradient explosions in early training.

# standard deviation grows inside the residual stream

x = torch.zeros(768)

n = 100 # e.g. 100 layers

for i in range(n):

x += torch.randn(768)

print(x.std())

tensor(9.8334)

# standard deviation grows inside the residual stream

x = torch.zeros(768)

n = 100 # e.g. 100 layers

for i in range(n):

x += n**-0.5 * torch.randn(768)

print(x.std())

tensor(0.9681)

# attempt to autodetect the device

device = "cpu"

if torch.cuda.is_available():

device = "cuda"

elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():

device = "mps"

print(f"using device: {device}")

torch.manual_seed(1337)

if torch.cuda.is_available():

torch.cuda.manual_seed(1337)

# get a data batch

train_loader = DataLoaderLite(B=4, T=32)

# get logits

model = GPT(GPTConfig())

model.to(device)

# logits, loss = model(x, y)

# print(loss)

# optimize!

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)

for i in range(50):

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

logits, loss = model(x, y)

loss.backward()

optimizer.step()

print(f"step {i}, loss: {loss.item()}")

using device: cuda loaded 338025 tokens 1 epoch = 2640 batches step 0, loss: 10.960028648376465 step 1, loss: 9.687705993652344 step 2, loss: 9.082895278930664 step 3, loss: 9.145988464355469 step 4, loss: 8.626201629638672 step 5, loss: 8.331700325012207 step 6, loss: 8.89795207977295 step 7, loss: 8.837981224060059 step 8, loss: 8.116044044494629 step 9, loss: 8.042159080505371 step 10, loss: 8.38084888458252 step 11, loss: 7.435604572296143 step 12, loss: 7.8245649337768555 step 13, loss: 7.458939552307129 step 14, loss: 7.5318756103515625 step 15, loss: 7.366677761077881 step 16, loss: 7.436798095703125 step 17, loss: 8.293567657470703 step 18, loss: 7.202799320220947 step 19, loss: 7.887030601501465 step 20, loss: 7.505932807922363 step 21, loss: 7.82287073135376 step 22, loss: 6.425383567810059 step 23, loss: 6.877799034118652 step 24, loss: 6.827328205108643 step 25, loss: 6.701854228973389 step 26, loss: 6.814748764038086 step 27, loss: 7.621225833892822 step 28, loss: 7.173999309539795 step 29, loss: 6.947432041168213 step 30, loss: 6.990048885345459 step 31, loss: 7.249020576477051 step 32, loss: 7.1423749923706055 step 33, loss: 7.010761737823486 step 34, loss: 7.922441482543945 step 35, loss: 7.815272808074951 step 36, loss: 7.735034942626953 step 37, loss: 7.712531566619873 step 38, loss: 8.020227432250977 step 39, loss: 7.527308464050293 step 40, loss: 7.416410446166992 step 41, loss: 6.918368339538574 step 42, loss: 7.015596866607666 step 43, loss: 7.060007095336914 step 44, loss: 6.982024669647217 step 45, loss: 7.039826393127441 step 46, loss: 6.0357255935668945 step 47, loss: 6.309432506561279 step 48, loss: 6.953232765197754 step 49, loss: 6.799220085144043

!nvidia-smi

Thu Aug 7 18:39:23 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 560.35.03 Driver Version: 560.35.03 CUDA Version: 12.6 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |

| N/A 50C P0 33W / 70W | 4013MiB / 15360MiB | 96% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 1 Tesla T4 Off | 00000000:00:05.0 Off | 0 |

| N/A 45C P8 9W / 70W | 3MiB / 15360MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

+-----------------------------------------------------------------------------------------+

huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks... To disable this warning, you can either: - Avoid using `tokenizers` before the fork if possible - Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)

2. Let's Make it Fast.¶

A good reference for Nvidia A100 GPU Specs: "NVIDIA A100 TENSOR CORE GPU: Unprecedented Acceleration at Every Scale"

2.1. GPUs, mixed precision, 1000ms¶

- Training on GPU accelerates matrix operations, enabling efficient computation via parallelism.

- Mixed precision training speeds up training and reduces memory usage using lower-precision floats (

float16,bfloat16) where safe. - PyTorch AMP (

torch.cuda.amp) automates this.

scaler = torch.cuda.amp.GradScaler()

for step in range(max_iters):

with torch.cuda.amp.autocast():

logits = model(x)

loss = F.cross_entropy(logits.view(-1, logits.size(-1)), targets.view(-1))

scaler.scale(loss).backward()

scaler.step(optimizer)

scaler.update()

optimizer.zero_grad()

Result: Training loop reduced to ~1000ms/iter on A100 GPU (baseline).

torch.cuda.get_device_name(0)

'Tesla T4'

!nvidia-smi

Thu Aug 7 18:39:24 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 560.35.03 Driver Version: 560.35.03 CUDA Version: 12.6 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |

| N/A 49C P0 35W / 70W | 4013MiB / 15360MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 1 Tesla T4 Off | 00000000:00:05.0 Off | 0 |

| N/A 45C P8 9W / 70W | 3MiB / 15360MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

+-----------------------------------------------------------------------------------------+

huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks... To disable this warning, you can either: - Avoid using `tokenizers` before the fork if possible - Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)

# attempt to autodetect the device

import time

device = "cpu"

if torch.cuda.is_available():

device = "cuda"

elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():

device = "mps"

print(f"using device: {device}")

torch.manual_seed(1337)

if torch.cuda.is_available():

torch.cuda.manual_seed(1337)

# get a data batch

train_loader = DataLoaderLite(B=4, T=1024) # reduced batch size from 16 to ensure GPU fit (avoid out-of-memory error)

# get logits

model = GPT(GPTConfig())

model.to(device)

# optimize!

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)

for i in range(50):

t0 = time.time()

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

logits, loss = model(x, y)

loss.backward()

optimizer.step()

torch.cuda.synchronize() # wait for GPU to finish all scheduled work above

t1 = time.time()

dt = (t1 - t0) * 1000 # time difference in milliseconds

tokens_per_sec = (train_loader.B * train_loader.T) / (t1 - t0)

print(f"step {i}, loss: {loss.item()}, dt: {dt:.2f}ms, tok/sec: {tokens_per_sec:.2f}")

using device: cuda loaded 338025 tokens 1 epoch = 82 batches step 0, loss: 10.928552627563477, dt: 1219.75ms, tok/sec: 3358.08 step 1, loss: 9.525257110595703, dt: 1146.88ms, tok/sec: 3571.42 step 2, loss: 8.98608684539795, dt: 1153.07ms, tok/sec: 3552.26 step 3, loss: 8.6998929977417, dt: 1152.42ms, tok/sec: 3554.25 step 4, loss: 8.393763542175293, dt: 1154.75ms, tok/sec: 3547.08 step 5, loss: 8.022571563720703, dt: 1155.55ms, tok/sec: 3544.63 step 6, loss: 7.91090726852417, dt: 1157.10ms, tok/sec: 3539.90 step 7, loss: 7.710432052612305, dt: 1161.94ms, tok/sec: 3525.13 step 8, loss: 7.629523277282715, dt: 1159.35ms, tok/sec: 3533.02 step 9, loss: 7.342772006988525, dt: 1159.44ms, tok/sec: 3532.75 step 10, loss: 7.3585638999938965, dt: 1142.60ms, tok/sec: 3584.80 step 11, loss: 7.35311222076416, dt: 1160.71ms, tok/sec: 3528.88 step 12, loss: 7.409618854522705, dt: 1165.44ms, tok/sec: 3514.55 step 13, loss: 7.307405471801758, dt: 1166.81ms, tok/sec: 3510.44 step 14, loss: 6.9155988693237305, dt: 1174.30ms, tok/sec: 3488.04 step 15, loss: 6.930902481079102, dt: 1175.51ms, tok/sec: 3484.45 step 16, loss: 6.717788219451904, dt: 1179.52ms, tok/sec: 3472.59 step 17, loss: 6.539855480194092, dt: 1178.49ms, tok/sec: 3475.62 step 18, loss: 6.674693584442139, dt: 1183.97ms, tok/sec: 3459.56 step 19, loss: 6.670464515686035, dt: 1184.05ms, tok/sec: 3459.31 step 20, loss: 6.871865749359131, dt: 1190.47ms, tok/sec: 3440.67 step 21, loss: 6.718591690063477, dt: 1190.53ms, tok/sec: 3440.48 step 22, loss: 6.653460502624512, dt: 1191.97ms, tok/sec: 3436.33 step 23, loss: 6.747048854827881, dt: 1179.44ms, tok/sec: 3472.84 step 24, loss: 6.787980556488037, dt: 1184.74ms, tok/sec: 3457.31 step 25, loss: 6.768372058868408, dt: 1194.10ms, tok/sec: 3430.21 step 26, loss: 6.593522548675537, dt: 1191.67ms, tok/sec: 3437.18 step 27, loss: 6.649495601654053, dt: 1198.53ms, tok/sec: 3417.53 step 28, loss: 6.6570963859558105, dt: 1198.16ms, tok/sec: 3418.57 step 29, loss: 6.5045037269592285, dt: 1200.70ms, tok/sec: 3411.35 step 30, loss: 6.420934677124023, dt: 1206.80ms, tok/sec: 3394.11 step 31, loss: 6.367345809936523, dt: 1207.12ms, tok/sec: 3393.21 step 32, loss: 6.426124572753906, dt: 1216.39ms, tok/sec: 3367.35 step 33, loss: 6.559837341308594, dt: 1217.51ms, tok/sec: 3364.25 step 34, loss: 6.557112693786621, dt: 1218.27ms, tok/sec: 3362.14 step 35, loss: 6.5374274253845215, dt: 1221.94ms, tok/sec: 3352.05 step 36, loss: 6.357591152191162, dt: 1227.32ms, tok/sec: 3337.36 step 37, loss: 6.514996528625488, dt: 1234.14ms, tok/sec: 3318.91 step 38, loss: 6.320087432861328, dt: 1236.36ms, tok/sec: 3312.96 step 39, loss: 6.1555914878845215, dt: 1233.63ms, tok/sec: 3320.27 step 40, loss: 6.273041248321533, dt: 1243.08ms, tok/sec: 3295.05 step 41, loss: 6.372799396514893, dt: 1242.23ms, tok/sec: 3297.29 step 42, loss: 6.222573280334473, dt: 1244.52ms, tok/sec: 3291.22 step 43, loss: 6.214957237243652, dt: 1236.55ms, tok/sec: 3312.45 step 44, loss: 6.36059045791626, dt: 1257.36ms, tok/sec: 3257.63 step 45, loss: 6.262412071228027, dt: 1256.21ms, tok/sec: 3260.60 step 46, loss: 6.1164021492004395, dt: 1264.38ms, tok/sec: 3239.54 step 47, loss: 6.135281085968018, dt: 1262.08ms, tok/sec: 3245.42 step 48, loss: 6.152599334716797, dt: 1255.24ms, tok/sec: 3263.12 step 49, loss: 6.059423923492432, dt: 1273.51ms, tok/sec: 3216.30

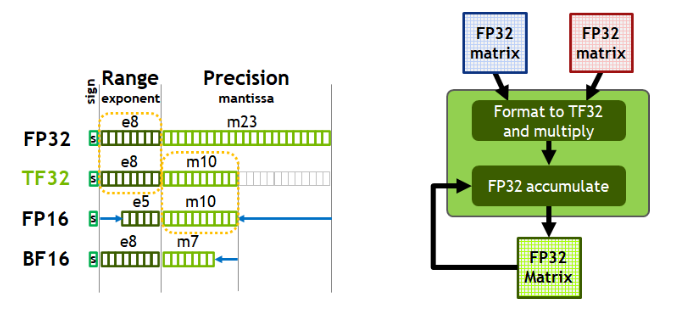

2.2. Tensor Cores, Timing the Code, TF32 precision, 333ms¶

- NVIDIA Tensor Core is just an instruction in the A100 architecture.

- It does 4x4 matrix multiplication with multiple configurations of different precisions (output and input precision).

- Tensor Cores speed up matrix multiplication on GPUs.

TF32format (default on Ampere GPUs like A100) gives the performance/precision ofFP16with the safety/range ofFP32.TF32uses the same 10-bit mantissa precision as half-precision (FP16) math, which is much higher than the precision requirements of AI workloads, with enough margin.- At the same time,

TF32uses the same 8-bit exponent asFP32, which can support the same numerical/digital range. - This combination makes

TF32an excellent alternative toFP32for single-precision math , especially for the massive multiply-accumulate calculations that are at the heart of deep learning and many High Performace Computing (HPC) applications. TF32strikes a balance between performance, range, and precision.

Figure 3. TensorFloat-32 (TF32). (Source)

Enable TF32 in PyTorch:

torch.set_float32_matmul_precision('high')

Time the training step:

start = time.time()

... # training loop

end = time.time()

print("step time:", end - start)

Step time drops to ~333ms when using

TF32.

Figure 4. Tensor Cores: Fast Matrix Multiply-Add (FMMA) with FP16 Input and FP32 Compute Capabilities. (Source)

# attempt to autodetect the device

import time

device = "cpu"

if torch.cuda.is_available():

device = "cuda"

elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():

device = "mps"

print(f"using device: {device}")

torch.manual_seed(1337)

if torch.cuda.is_available():

torch.cuda.manual_seed(1337)

# get a data batch

train_loader = DataLoaderLite(B=4, T=1024) # reduced batch size from 16 to ensure GPU fit (avoid out-of-memory error)

torch.set_float32_matmul_precision('high')

# get logits

model = GPT(GPTConfig())

model.to(device)

# optimize!

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)

for i in range(50):

t0 = time.time()

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

logits, loss = model(x, y)

loss.backward()

optimizer.step()

torch.cuda.synchronize() # wait for GPU to finish all scheduled work above

t1 = time.time()

dt = (t1 - t0) * 1000 # time difference in milliseconds

tokens_per_sec = (train_loader.B * train_loader.T) / (t1 - t0)

print(f"step {i}, loss: {loss.item()}, dt: {dt:.2f}ms, tok/sec: {tokens_per_sec:.2f}")